| Arabic Text Detection | Optical Character Recognition (OCR) |

</td>

</tr>

</table>

</td>

</tr>

</table>

## Datasets

| Name | Description |
|------|-------------|
| Arabic Digits | 70,000 images (28×28) converted to binary from Digits |
| Arabic Letters | 16,759 images (32×32) converted to binary from Letters |
| Arabic Poems | 146,604 poems scraped from aldiwan |
| Arabic Translation | 100,000 parallel Arabic-to-English translations ported from OpenSubtitles |
| Product Reviews | 1,648 product reviews ported from Large Arabic Resources For Sentiment Analysis |
| Image Captions | 30,000 image paths with captions extracted and translated from COCO 2014 |
| Arabic Wiki | 4,670,509 words cleaned and processed from Wikipedia Monolingual Corpora |
| Arabic Poem Meters | 55,440 verses with their associated meters collected from aldiwan |
| Arabic Fonts | 516 images (100×100) for two classes |

## Tools

To make the models easily accessible to contributors, developers, and novice users, we use two approaches.

### Google Colab

[Google Colaboratory](https://colab.research.google.com/) is a free service offered by Google for research purposes. A Colab notebook's interface is very similar to a Jupyter notebook, with slight differences. Google offers three hardware options, `CPU`, `GPU`, and `TPU`, with the latter two speeding up training. We almost always use the `GPU` because it is easier to work with and achieves good results in a reasonable time. Check out this great [tutorial](https://medium.com/deep-learning-turkey/google-colab-free-gpu-tutorial-e113627b9f5d) on Medium.
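Before training in Colab, it can help to confirm that the GPU runtime is actually active. The following is a minimal sketch, assuming TensorFlow 2.1 or later (where `tf.config.list_physical_devices` is available); `gpu_available` is a hypothetical helper name, not part of ARBML. In Colab, the GPU itself is selected via **Runtime > Change runtime type**.

```python
def gpu_available() -> bool:
    """Return True if TensorFlow can see at least one GPU device.

    Hypothetical helper; assumes TensorFlow >= 2.1 if it is installed.
    """
    try:
        import tensorflow as tf
    except ImportError:
        # TensorFlow is not installed in this environment, so no GPU is usable.
        return False
    # Lists the physical GPU devices TensorFlow detected (empty list if none).
    return len(tf.config.list_physical_devices("GPU")) > 0


if __name__ == "__main__":
    print("GPU available:", gpu_available())
```

If this prints `False` in Colab, the notebook is most likely still on the default CPU runtime.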

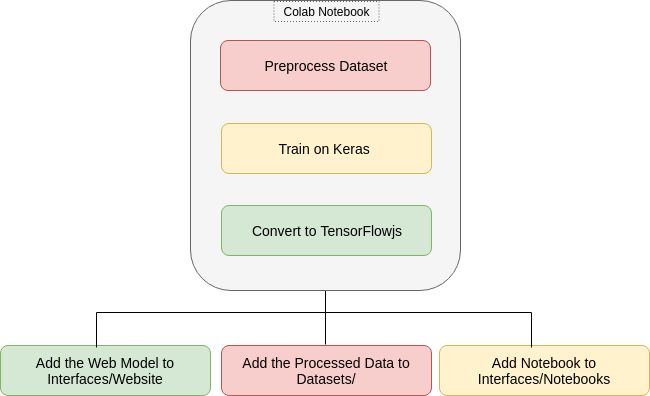

### TensorFlow.js

TensorFlow.js is the part of the TensorFlow ecosystem that supports training and running machine learning models in the browser. To port a model to the web, follow these steps:

1. Train the model with Keras, then save it with `model.save('keras.h5')`.

2. Install the TensorFlow.js converter with `pip install tensorflowjs`.

3. Convert the saved model by running `tensorflowjs_converter --input_format keras keras.h5 model/`.

4. The `model` directory will then contain `model.json` and one or more weight files, such as `group1-shard1of1`.

5. Finally, load the model in the browser using TensorFlow.js.
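Steps 3 and 4 can also be scripted. This is a minimal Python sketch, not part of the original workflow: `converter_command` and `weight_files` are hypothetical helper names, and the code assumes the converted `model.json` lists its shard file names under `weightsManifest[*].paths`, which is how TensorFlow.js model files are laid out.

```python
import json
from pathlib import Path
from typing import List


def converter_command(h5_path: str, out_dir: str) -> List[str]:
    """Build the step-3 tensorflowjs_converter call as an argument list."""
    return ["tensorflowjs_converter", "--input_format", "keras", h5_path, out_dir]


def weight_files(model_json: str) -> List[str]:
    """Return the weight shard files referenced by a converted model.json."""
    manifest = json.loads(Path(model_json).read_text(encoding="utf-8"))
    files: List[str] = []
    # Each weights-manifest group names the shard files that hold its weights.
    for group in manifest.get("weightsManifest", []):
        files.extend(group.get("paths", []))
    return files


# Usage (assumes `pip install tensorflowjs` and a saved keras.h5 in the cwd):
#   import subprocess
#   subprocess.run(converter_command("keras.h5", "model/"), check=True)
#   print(weight_files("model/model.json"))
```

Every file returned by `weight_files` must be served next to `model.json`, since the browser fetches the shards relative to it.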

See this [tutorial](https://medium.com/tensorflow/train-on-google-colab-and-run-on-the-browser-a-case-study-8a45f9b1474e) I wrote for the complete procedure.

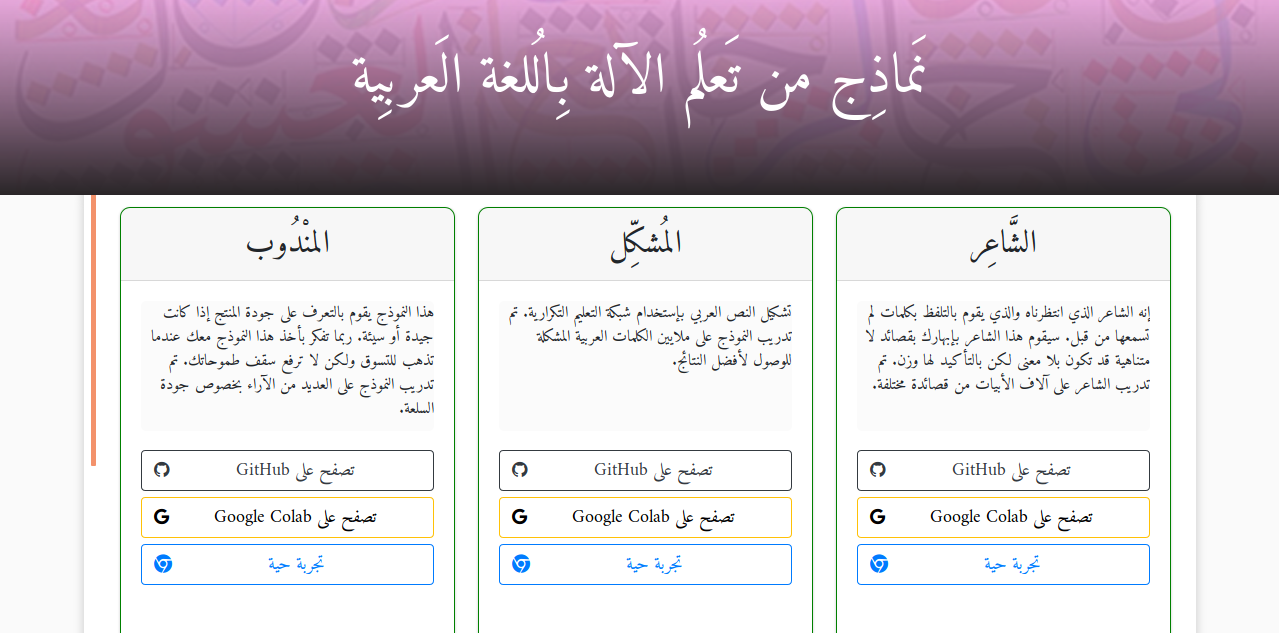

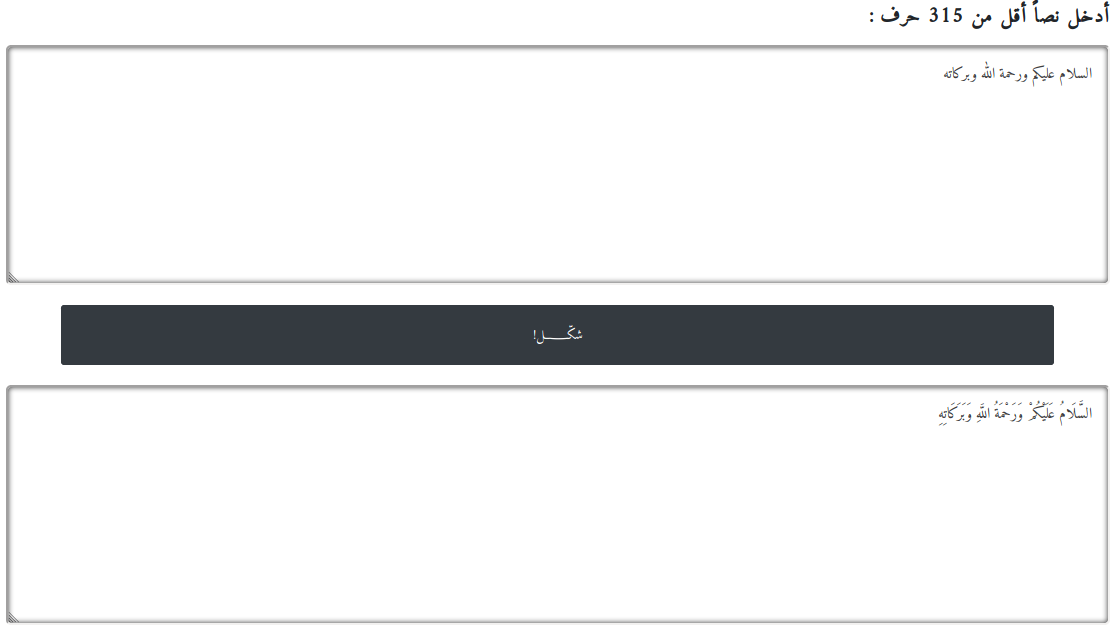

## Website

We developed many models that run directly in the browser. With TensorFlow.js, the models run on the client's GPU. Because the webpage is static and inference happens on the user's machine, user input is processed locally rather than sent to a server, which minimizes privacy and security risks. You can visit the website [here](https://arbml.github.io/ARBML/Interfaces/Website/). Here is the main interface of the website:

The models added so far:

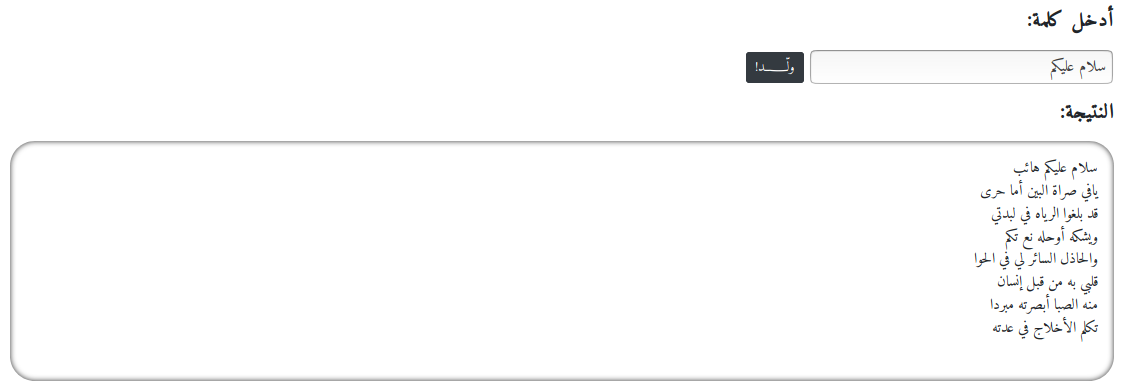

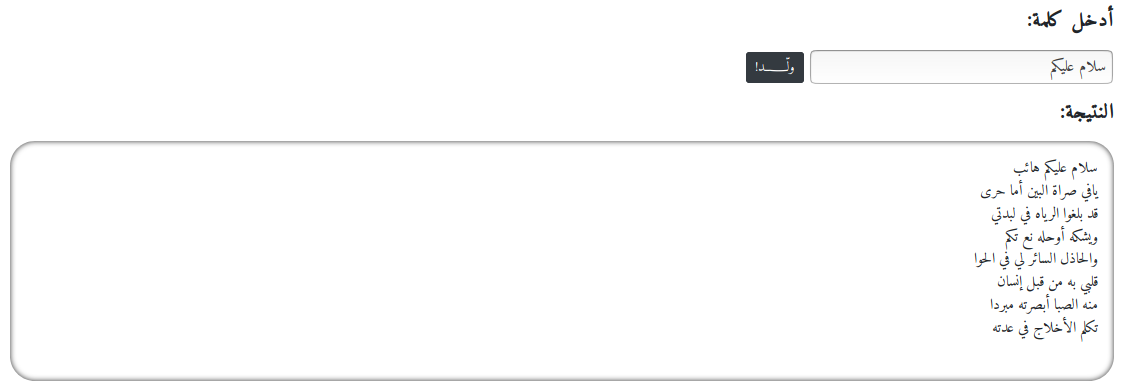

### Poems Generation

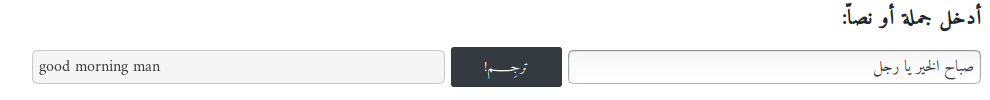

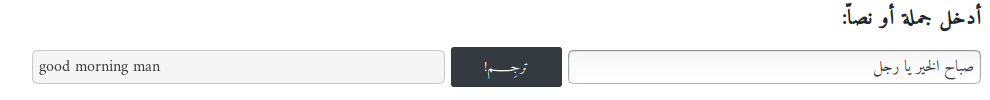

### English Translation

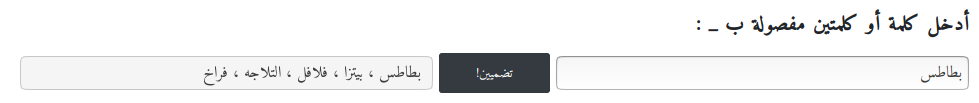

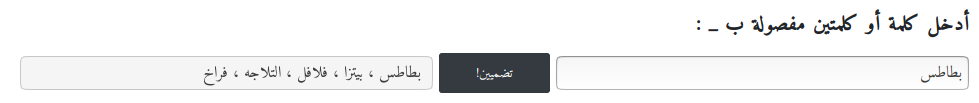

### Words Embedding

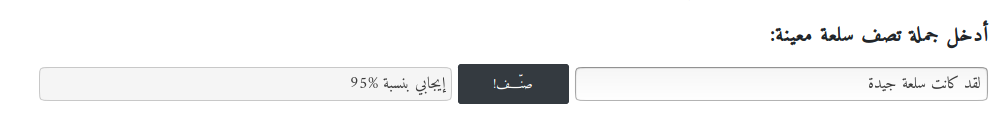

### Sentiment Classification

### Image Captioning

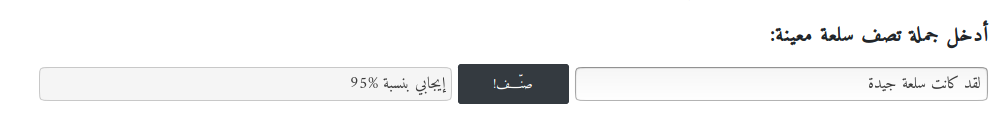

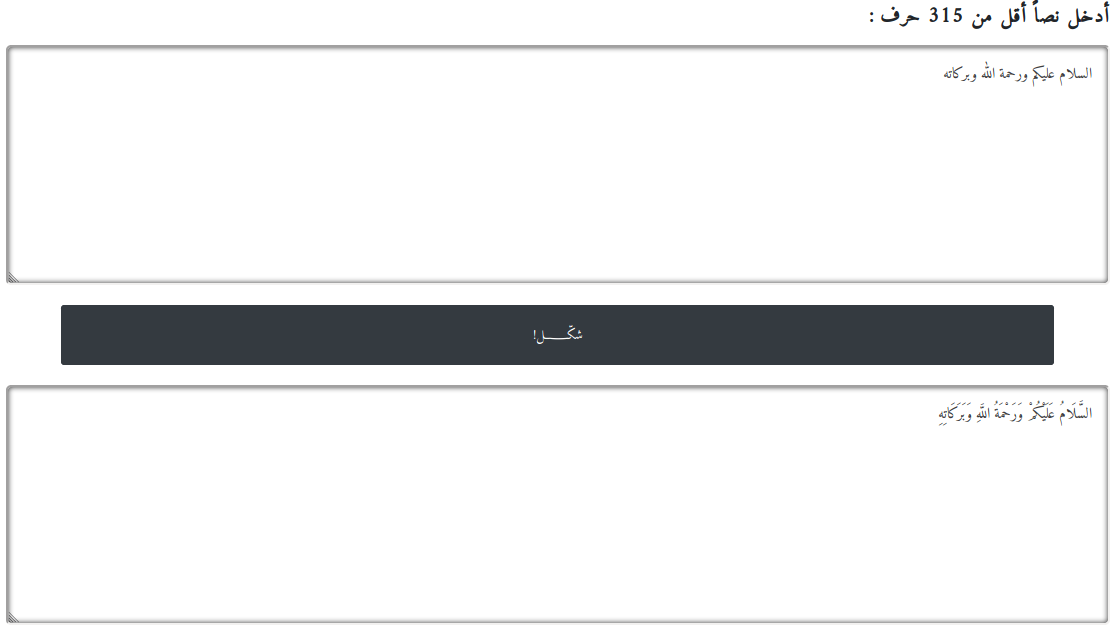

### Diacritization

## Contribution

See [CONTRIBUTING.md](https://raw.githubusercontent.com/zaidalyafeai/ARBML/master/CONTRIBUTING.md) for a detailed explanation of how to contribute.

## Resources

For now, we host the website, models, datasets, and other content on GitHub. Unfortunately, GitHub's storage limits will become a problem as the project grows. _Please let us know if you have suggestions on this matter._

## Contributors

Thanks goes to these wonderful people ([emoji key](https://allcontributors.org/docs/en/emoji-key)):

This project follows the [all-contributors](https://github.com/all-contributors/all-contributors) specification. Contributions of any kind welcome!

## Citation

```
@inproceedings{alyafeai-al-shaibani-2020-arbml,
    title = "{ARBML}: Democritizing {A}rabic Natural Language Processing Tools",
    author = "Alyafeai, Zaid and
      Al-Shaibani, Maged",
    booktitle = "Proceedings of Second Workshop for NLP Open Source Software (NLP-OSS)",
    month = nov,
    year = "2020",
    address = "Online",
    publisher = "Association for Computational Linguistics",
    url = "https://www.aclweb.org/anthology/2020.nlposs-1.2",
    pages = "8--13",
}
```
