Abstract

Calligraphy is an essential part of Arabic heritage and culture. It has been used in the past for the decoration of houses and mosques. Usually, such calligraphy is designed manually by experts with aesthetic insight. In the past few years, there has been a considerable effort to digitize this type of art, either by taking photos of decorated buildings or by drawing the designs using digital devices. The latter is considered an online form, where the drawing is tracked by recording the movement of the apparatus, an electronic pen for instance, on a screen. In the literature, there are many offline datasets collected with a diversity of Arabic calligraphy styles. However, there is no available online dataset for Arabic calligraphy. In this paper, we illustrate our approach for the collection and annotation of an online dataset for Arabic calligraphy called Calliar, which consists of 2,500 sentences. Calliar is annotated for stroke, character, word and sentence level prediction.

Demo

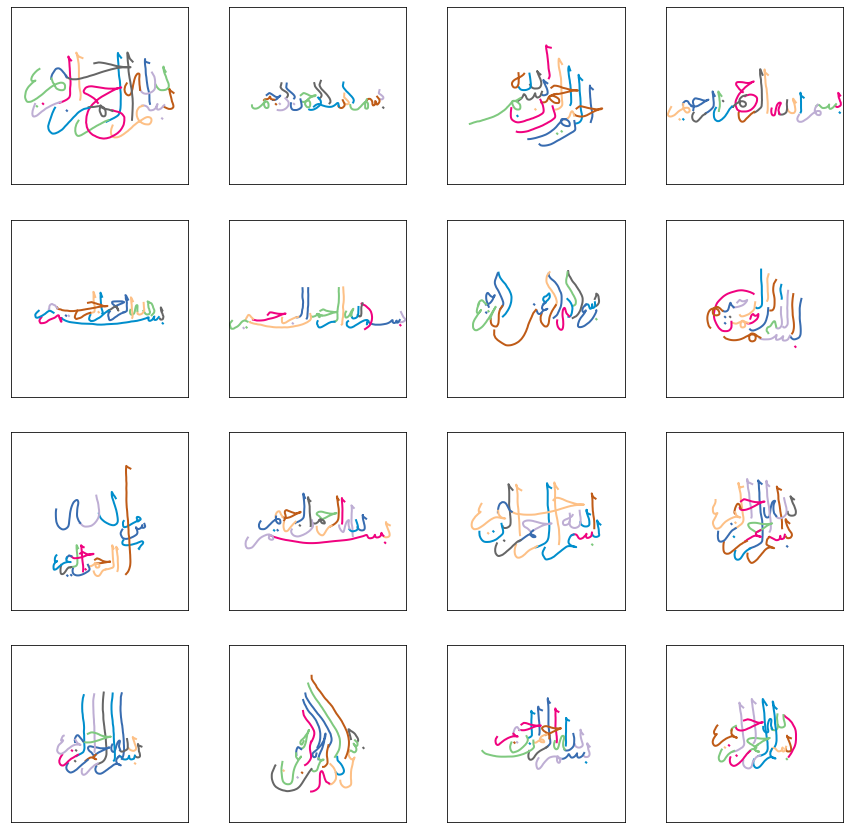

You can generate an animation of a subset of the dataset by clicking the button below. Because this runs directly in the browser, we only used 100 samples for the demonstration. The 100 JSON files were converted to a minified version in which all points are converted to integers and the stroke annotations are removed. Each press of generate renders a new sketch in which each stroke is drawn in a different color. The animation speed is proportional to the length of the stroke, so some strokes animate quickly and others slowly.
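The minification step described above can be sketched roughly as follows. This is only an illustration: the input layout (a list of strokes, each a dict with a `label` and a list of `[x, y]` points) and the helper names `minify_sample` and `stroke_length` are assumptions, not the actual Calliar JSON schema or the demo's code. The stroke length computed at the end is the quantity the animation speed depends on.

```python
import json
import math


def minify_sample(strokes):
    """Round every point to integers and drop the stroke labels.

    `strokes` is assumed (hypothetically) to be a list of dicts of the
    form {"label": ..., "points": [[x, y], ...]}.
    """
    return [
        [[int(round(x)), int(round(y))] for x, y in stroke["points"]]
        for stroke in strokes
    ]


def stroke_length(points):
    """Total polyline length of one stroke (sum of segment lengths)."""
    return sum(math.dist(p, q) for p, q in zip(points, points[1:]))


# A made-up sample with two strokes, for illustration only.
sample = [
    {"label": "a", "points": [[0.2, 0.4], [3.1, 4.2], [6.0, 8.1]]},
    {"label": "b", "points": [[10.0, 10.0], [10.0, 12.6]]},
]

minified = minify_sample(sample)
# Compact separators remove all optional whitespace from the JSON output.
compact = json.dumps(minified, separators=(",", ":"))
lengths = [stroke_length(points) for points in minified]

print(compact)
print(lengths)
```

Rounding to integers and dropping labels keeps the files small enough to ship to the browser, at the cost of sub-pixel precision that the animation does not need.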

Collection & Annotation

The collection procedure was done over a span of months. We used some of the images that we collected to generate some calligraphy as shown in the image below. One of the main websites that we used initially is namearabic. Luckily, this source already had some text annotations, so we only had to spend a few hours annotating a few hundred images with drawings. For other datasets we had to annotate the images with text and then with sketch drawings.

The annotation procedure took a few weeks. We used two Samsung Galaxy Tab S6 tablets as the main devices for the annotation procedure. It had two main phases: in the first phase we annotated each image with its text, and in the second phase we used the pen of the tablet to draw the annotation. We repeated these steps multiple times to increase the size of the dataset. Because the images in the initial dataset were 600x600, we decided to fix the maximum dimension to 600 and rescale the other dimension accordingly to preserve the aspect ratio.

During the annotation process we faced many problems, such as empty annotations, repeated strokes and problems with the touch screen that created wrong annotations. To deal with these problems, we went through many back-and-forth verification steps, annotating and then immediately animating the results using Python. We would annotate some images and then remove them because they had problems. One sanity check that we used is to compare the text annotation with the JSON stroke annotation, which quickly reveals empty annotations. The other problems are difficult to deal with, especially wrong drawings produced by the tablet. We relied on post-processing to get rid of such annotations by comparing the variance of the points in each stroke drawing.
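The rescaling rule and the two checks described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' code: the function names, the representation of a stroke as a list of `[x, y]` points, and in particular the `min_spread` threshold and the idea that a very low variance marks an accidental touch are all hypothetical choices made for this example.

```python
from statistics import pvariance

MAX_DIM = 600  # longest side after rescaling, as stated in the text


def rescale_size(width, height, max_dim=MAX_DIM):
    """Scale (width, height) so the longer side equals max_dim,
    keeping the aspect ratio."""
    longest = max(width, height)
    return round(width * max_dim / longest), round(height * max_dim / longest)


def is_empty_annotation(text, strokes):
    """Flag a sample that has a text label but no drawn points."""
    return bool(text.strip()) and not any(len(s) > 0 for s in strokes)


def stroke_spread(points):
    """Sum of the x and y population variances of a stroke's points."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return pvariance(xs) + pvariance(ys)


def drop_degenerate_strokes(strokes, min_spread=1.0):
    """Keep strokes whose spread exceeds a threshold.

    The threshold value and the direction of the comparison are
    assumptions for illustration; the text only says variance was used.
    """
    return [s for s in strokes if len(s) > 1 and stroke_spread(s) > min_spread]


# Made-up strokes: one real stroke and one near-stationary touch.
strokes = [
    [[0, 0], [50, 40], [100, 90]],
    [[5, 5], [5, 5], [6, 5]],
]

print(rescale_size(1200, 800))
print(is_empty_annotation("some text", []))
print(len(drop_degenerate_strokes(strokes)))
```

A variance-style filter is cheap to run over the whole dataset, but any threshold would need to be tuned by inspecting the strokes it removes, since short diacritic marks can also have a small spread.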

Creative Applications

There is a lot of controversy in the field of AI about whether it can be used to create intelligent and artistic programs. In the last few years there has been a lot of research on applying AI creatively, such as Style Transfer[1], Deep Dream[2], GauGAN[3], etc. Most of these techniques apply to images, that is, to computer vision. Creative applications are much more difficult in natural language processing (NLP): modelling language is intrinsically complex, let alone using it creatively.

One of the most interesting applications is sketch generation. A notable paper in this area is Generating Sequences With Recurrent Neural Networks by Alex Graves, which assumes the existence of an online corpus of stroke sequences for English. Building upon that, there have been many papers in the field, like sketchRNN[4], GANwriting[5], ScrabbleGAN[6], DF-GAN[7], BézierSketch[8] and DoodlerGAN[9]. Most of these papers work on English, whose script is much simpler than that of languages like Arabic. The complexity of Arabic arises from its cursive nature, in which letters are connected together, and from the long history of Arabic calligraphy, which is still used extensively nowadays. The different styles of Arabic calligraphy, together with the freedom in drawing some letters, make the problem much harder.

As the only dataset that collects online stroke information for different calligraphic styles, Calliar opens the door to many applications. The difficulty still lies in modelling natural language from the strokes: there is a trade-off between creating nice strokes and generating sensible language. In the literature, most generated strokes are words, not sentences. In Arabic calligraphy, creating isolated words is not an interesting problem. Much of the beauty comes from following a style and from fitting the words to a specific layout, which is usually a circle as in Diwani or a square as in Kufi. A contribution that we want to achieve in the future is to generate calligraphy conditioned on style and text.

Tweet

Pleased to announce Calliar, the first online dataset for Arabic Calligraphy. Joint work with @_MagedSaeed_ @alwaridi and Yousif Al-Wajih.

— Zaid زيد (@zaidalyafeai) June 22, 2021

Paper: https://t.co/q5mwcP6roa

Code & data: https://t.co/tDCPJRYUcl

Colab: https://t.co/46yD0V3wht pic.twitter.com/qbbb4tZJ6l

Citation

@misc{alyafeai2021calliar,
  title={Calliar: An Online Handwritten Dataset for Arabic Calligraphy},
  author={Zaid Alyafeai and Maged S. Al-shaibani and Mustafa Ghaleb and Yousif Ahmed Al-Wajih},
  year={2021},
  eprint={2106.10745},
  archivePrefix={arXiv},
  primaryClass={cs.CL}
}

References

1. Johnson, Justin, Alexandre Alahi, and Li Fei-Fei. "Perceptual losses for real-time style transfer and super-resolution." European Conference on Computer Vision. Springer, Cham, 2016.
2. Mordvintsev, Alexander, Christopher Olah, and Mike Tyka. "Inceptionism: Going deeper into neural networks." (2015).
3. Park, Taesung, et al. "GauGAN: semantic image synthesis with spatially adaptive normalization." ACM SIGGRAPH 2019 Real-Time Live!. 2019. 1-1.
4. Ha, David, and Douglas Eck. "A neural representation of sketch drawings." arXiv preprint arXiv:1704.03477 (2017).
5. Kang, Lei, et al. "GANwriting: Content-conditioned generation of styled handwritten word images." European Conference on Computer Vision. Springer, Cham, 2020.
6. Fogel, Sharon, et al. "ScrabbleGAN: semi-supervised varying length handwritten text generation." Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2020.
7. Tao, Ming, et al. "DF-GAN: Deep fusion generative adversarial networks for text-to-image synthesis." arXiv preprint arXiv:2008.05865 (2020).
8. Das, Ayan, et al. "BézierSketch: A generative model for scalable vector sketches." arXiv preprint arXiv:2007.02190 (2020).
9. Ge, Songwei, et al. "Creative Sketch Generation." arXiv preprint arXiv:2011.10039 (2020).